Financial services have always been one of the most interesting sources of messaging innovation, because their demands fit messaging systems so naturally. Financial professionals, particularly traders, need to process significant amounts of information, and there is a constant stream of offers and deals being made on the stocks and derivatives available. Dealing with data coming from different exchanges (where the deals are actually made), and adding extra pertinent information such as financial news, means a trader is likely to be interested in a diverse range of updates, which they need as quickly as possible. As markets have become more computerised, moving away from person-to-person trading on exchange floors, and more automated, with trading decisions made or suggested by algorithms, this need has only grown stronger.

In 1975, Vivek Ranadivé came from Mumbai to MIT in the United States to study electrical engineering. He went on to work for Ford and Linkabit (a networking company), and then earned an MBA at Harvard Business School, where he hit on the idea of distributing data in a different way:

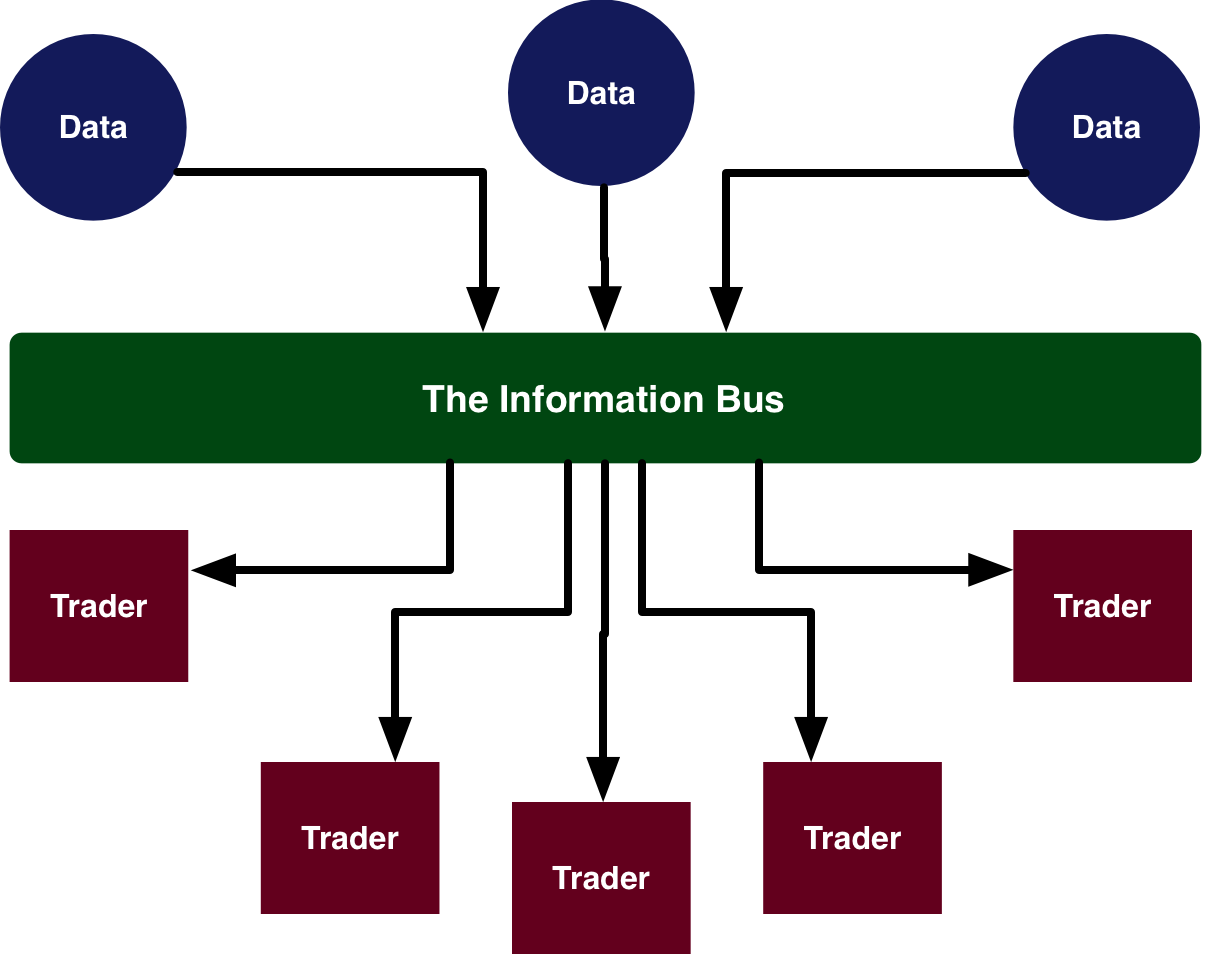

“My background was as a hardware engineer. If you look inside a computer, there’s a back plane, or bus, with cards that plug in to run the machine’s functions. My idea was to create a software bus and plug applications into that.”

- Vivek Ranadivé1

The idea took shape as TIB, The Information Bus, and at its heart was a Publish/Subscribe, or Pub/Sub, system. Most network applications at the time followed a client-server, or request/response, model, in which a client made a request to a server and received a reply. This was particularly limiting in finance, because the server could only send information when the client requested it, and clients had to keep track of which servers to ask for each type of data. The model worked well for software driven by users doing things, but not for software that needed to be driven by events happening elsewhere. Several ‘push’ technologies had been created, but they were really no more than the client polling the server: making a request in the background at a regular interval to see whether anything had changed. This was still slow to respond, and put a significant load on both the network and the server handling the requests.

In the Pub/Sub system that Ranadivé developed with his company in the late 1980s (originally named Teknekron Software Systems, and later Tibco, for “The Information Bus Company”), clients became consumers or subscribers, registering their interest in types of information. Publishers produced messages as and when relevant data became available, and the ‘information bus’ delivered the messages to all interested consumers.

Subscribers registered their interest against specific topics, which were usually strings of characters indicating what type of data a message contained. Publishers labelled each message with the topic it covered. This was a major improvement on the previous model of data delivery, which was more like sending an email to a single, specific address. With topics, delivery worked like a mailing list, where a copy of the same message is sent to everyone interested in the subject of that list.
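The mailing-list analogy can be made concrete with a minimal, in-process sketch. The class name `InformationBus`, its `subscribe` and `publish` methods and the topic strings are all hypothetical, chosen for illustration; this is not Tibco's API, and a real bus would deliver messages across a network rather than by calling local functions.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# A subscriber is a callback that receives (topic, message).
Handler = Callable[[str, str], None]


class InformationBus:
    """Toy topic-based Pub/Sub bus (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        # topic string -> handlers that registered interest in it
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        # Registering interest is like joining a mailing list.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: str) -> int:
        # Every subscriber to the topic gets a copy; the publisher never
        # needs to know who, if anyone, is listening.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(topic, message)
        return len(handlers)  # number of copies delivered


tech_desk: List[str] = []
agri_desk: List[str] = []

bus = InformationBus()
bus.subscribe("equities.tech", lambda t, m: tech_desk.append(m))
bus.subscribe("commodities.agri", lambda t, m: agri_desk.append(m))

bus.publish("equities.tech", "ACME bid 101.5")
bus.publish("commodities.agri", "Wheat futures up 2%")
print(tech_desk)  # ['ACME bid 101.5']
print(agri_desk)  # ['Wheat futures up 2%']
```

The tech-stock trader sees only the tech feed, and a publisher on a topic nobody follows simply delivers zero copies.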

Before this system, each trader on a trading floor would get a bit of everything: someone who only dealt with tech stocks would still see the data about agriculture, for example. By moving to Pub/Sub, traders could get customised views of exactly what they were interested in, by subscribing to specific feeds of data.

This greatly simplified the mountain of different systems that financial traders had to deal with. Rather than each system requiring its own client or terminal, simple adaptors could be written, much like the ARPANET IMPs, that integrated with financial news and market data systems, turned their output into messages and pushed them on to the information bus. This made the coupling between data sources and data consumers very loose, and meant that scaling up the number of connections and the volume of data was mainly a matter of scaling the information bus, which was significantly simpler than upgrading each individual producer.

In a traditional network, packets are routed towards the target system based on the target’s IP address, a 32-bit number (in IPv4) that is unique to a given host on the network. Routers learn where to send packets for ranges of IP addresses by advertising the ranges they can reach, along with their distance to them in hops, and by listening to the same advertisements from their neighbours. Routing a packet then involves looking up the target IP address in a table of known ranges and choosing the network port with the shortest distance. If that path becomes unavailable or congested, the router can pick a different option and send the packet onwards. The Information Bus worked fundamentally differently. Because it was built as a layer on top of the existing network, it could leave the problem of mapping addresses to routes to that network, and focus instead on mapping topics to the clients interested in them.
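The address-based lookup described above can be sketched with Python's standard `ipaddress` module. The `Route` record, the `choose_port` function and the port names are hypothetical. The sketch follows the simplified description in the text (fewest hops among matching ranges, skipping unavailable ports); real IP forwarding first prefers the most specific matching range (longest-prefix match), with hop counts serving as a routing-protocol metric that decides which routes enter the table.

```python
import ipaddress
from dataclasses import dataclass
from typing import AbstractSet, List, Optional


@dataclass
class Route:
    network: ipaddress.IPv4Network  # range of destination addresses covered
    port: str                       # outgoing port (hypothetical names)
    hops: int                       # advertised distance to that range


def choose_port(routes: List[Route], dest: str,
                unavailable: AbstractSet[str] = frozenset()) -> Optional[str]:
    """Return the port with the fewest hops among usable routes covering dest."""
    addr = ipaddress.IPv4Address(dest)
    candidates = [r for r in routes
                  if addr in r.network and r.port not in unavailable]
    if not candidates:
        return None  # no usable route known for this address
    return min(candidates, key=lambda r: r.hops).port


routes = [
    Route(ipaddress.IPv4Network("10.1.0.0/16"), "eth0", hops=3),
    Route(ipaddress.IPv4Network("10.1.0.0/16"), "eth1", hops=1),
    Route(ipaddress.IPv4Network("192.168.0.0/24"), "eth2", hops=2),
]
print(choose_port(routes, "10.1.2.3"))                        # eth1 (fewest hops)
print(choose_port(routes, "10.1.2.3", unavailable={"eth1"}))  # eth0 (fallback path)
print(choose_port(routes, "172.16.0.1"))                      # None (no route)
```

Note that nothing in this table knows what a packet is *about*; it only knows where an address lives, which is exactly the concern the Information Bus delegated to the underlying network.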

The Information Bus servers became additional routers: they knew which clients were interested in which topics, matched incoming messages to those subscriptions, and sent the messages on to all interested parties. In topic-based Pub/Sub addressing, there is no single target endpoint, but an ever-changing collection of receivers. In many ways, subscribing to a topic was really a signal to join this group, and on receiving the signal the messaging system could take steps to optimise delivery for the newly enlarged group. Some later systems have used even more abstract routing, such as content-based addressing, where a message is routed to receivers based on queries that inspect the specific contents of each message, though this generally comes at a significant cost in performance or in the resources needed to push the messages. Pub/Sub took the established ideas of message queuing and message passing and made them more powerful by adding more flexible addressing.
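To see why content-based addressing tends to cost more than topic-based addressing, compare the two matching steps in a small sketch. The names `Subscription`, `route_by_topic` and `route_by_content`, and the message fields, are hypothetical; real content-based systems use query languages and indexing tricks to reduce the work, but the basic trade-off is the same.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]
Predicate = Callable[[Message], bool]


def route_by_topic(topic_index: Dict[str, List[str]], topic: str) -> List[str]:
    # Topic routing: a single dictionary lookup on the message's label,
    # regardless of what the message body contains.
    return topic_index.get(topic, [])


@dataclass
class Subscription:
    name: str
    predicate: Predicate  # arbitrary query over the message contents


def route_by_content(subs: List[Subscription], msg: Message) -> List[str]:
    # Content routing: every subscription's query is evaluated against every
    # message, so work grows with messages x subscriptions x query cost.
    return [s.name for s in subs if s.predicate(msg)]


subs = [
    Subscription("big-tech-trades",
                 lambda m: m["sector"] == "tech" and m["qty"] >= 10_000),
    Subscription("all-agri", lambda m: m["sector"] == "agri"),
]
trade = {"sector": "tech", "symbol": "ACME", "qty": 25_000}
print(route_by_content(subs, trade))  # ['big-tech-trades']
print(route_by_topic({"equities.tech": ["desk-a", "desk-b"]}, "equities.tech"))
```

Content routing lets a subscriber ask for "large tech trades" rather than "all tech trades", at the price of inspecting every message against every query.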

A> Like many great ideas, messaging had occurred to several engineers over the years, sometimes more than once:
A>
A> “By 1986 we had discovered no less than 17 separate, completely independent examples of yet another group inside DEC “inventing” message queuing! Each independent “inventor” seemed to go through the same process of denial and emotion when they discovered that they were neither the only person nor the first person to come up with the idea for message queuing. I don’t know of any greater example of Not Invented Here Syndrome than the message queuing wars that went on inside DEC in the mid ‘80s!”
A>
A> - Erik Townsend2

Middleware

Tibco’s TIB software, and later its Rendezvous product, was used by a number of banks and financial companies, and spread into other businesses that could benefit from flexible distribution of data. However, Tibco was far from the only provider, and the term Message Oriented Middleware came into use for the stack of software services that distributed the messages. IBM developed its WebSphere MQ product (originally MQSeries), Microsoft released its own entry, MSMQ, and a host of other companies started selling into this market.

The middleware, as the name implies, is the software that sits in the middle and actually handles message distribution. The model defined by Tibco and others was to have services known as message brokers receive messages directly from producers or publishers, perform any transformations or filtering required, and then route the messages on to subscribers. A broker would usually offer a degree of persistence, so that consumers could come and go and the broker would still try to deliver messages they might have missed. In this way, brokers provided both message queuing (often one queue per topic or per consumer) and message passing, handling the actual end-to-end delivery of each message. Most brokers also included varying degrees of failure recovery, configurability, audit logging, security filtering and other features needed by the major clients of the time.
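The "consumers can come and go" behaviour can be illustrated with a toy broker that keeps one queue per consumer. The `Broker` class and its `subscribe`, `connect`, `disconnect` and `publish` methods are hypothetical and not modelled on any particular product; the queues here live only in memory, whereas a real broker would write them to durable storage and add acknowledgements, retries and security.

```python
from collections import defaultdict, deque
from typing import Callable, DefaultDict, Deque, Dict, List, Set

Handler = Callable[[str], None]


class Broker:
    """Toy broker: one queue per consumer, held while the consumer is away."""

    def __init__(self) -> None:
        self._topics: DefaultDict[str, Set[str]] = defaultdict(set)       # topic -> consumer ids
        self._queues: DefaultDict[str, Deque[str]] = defaultdict(deque)   # consumer id -> pending
        self._online: Dict[str, Handler] = {}                             # currently connected

    def subscribe(self, consumer: str, topic: str) -> None:
        self._topics[topic].add(consumer)

    def connect(self, consumer: str, handler: Handler) -> None:
        # On (re)connect, first deliver anything queued while offline.
        self._online[consumer] = handler
        queue = self._queues[consumer]
        while queue:
            handler(queue.popleft())

    def disconnect(self, consumer: str) -> None:
        self._online.pop(consumer, None)

    def publish(self, topic: str, message: str) -> None:
        for consumer in self._topics.get(topic, set()):
            handler = self._online.get(consumer)
            if handler is not None:
                handler(message)                        # deliver immediately
            else:
                self._queues[consumer].append(message)  # hold until reconnect


received: List[str] = []
broker = Broker()
broker.subscribe("trader-1", "equities.tech")
broker.connect("trader-1", received.append)
broker.publish("equities.tech", "ACME bid 101.5")
broker.disconnect("trader-1")
broker.publish("equities.tech", "ACME bid 101.6")  # trader offline: queued
broker.connect("trader-1", received.append)        # queued message delivered
print(received)  # ['ACME bid 101.5', 'ACME bid 101.6']
```

Keeping a queue per consumer, rather than per topic, is the simplest way to let each subscriber catch up independently; a per-topic queue would need extra bookkeeping to track how far each consumer had read.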

Some products, such as IBM’s MQSeries or Oracle’s Advanced Queuing, used a central server to provide the middle layer. The approach taken by Tibco’s Rendezvous (RV) system, and to a degree by others such as Talarian’s SmartSockets, was to run many small services that provided local queuing for application data and communicated with each other, for example intelligently falling back to alternative brokers in the event of failure.

Having part of the broker on each node meant the system was distributed and peer-to-peer. When a node joined, it located nearby nodes and could pass information on to them, depending on the relative cost of reaching each one. In this way, Tibco implemented a messaging network overlaid on the TCP or UDP network beneath it.

This used multicast between the services, a topic we’ll come to a little later. The downside of the local model was the extra hops between the different nodes, which added latency. For most usage at the time the impact was fairly negligible, only becoming more of an issue later as volumes increased and demands mounted for reaction times faster than human scale, again a topic worth revisiting.

Event Driven

Ranadivé’s vision was to simplify the lives of the traders who had to sort through reams of information and make timely decisions, often involving significant amounts of money. He saw traders as the front line of a wider organisational change that would see companies become more event driven. Normal behaviour would be handled automatically, as a matter of course, and only exceptional circumstances would generate events, communicated through messages, which would reach the desks of everyone who needed to know about them via the information bus.

Everyone at a company would become the equivalent of a trader, tuning their consumption of knowledge and acting quickly and independently to do their job as effectively as possible. While the impact of the organisational idea is for business management experts to debate, the software idea proved very powerful. Systems which respond to events are an important use case for messaging systems of all types, and have found their way into many, if not most, large infrastructures.